Recommended[1] | Source[2]

Supplementary reading to the research paper

RAGate: A Gating Model

- RAGate models conversation context and relevant inputs to predict if a conversational system requires RAG for improved responses.

- Conclusion: Applied to a RAG-based conversational system, RAGate identifies the turns where retrieval augmentation is appropriate, yielding high-quality responses with high generation confidence.
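The gating idea can be sketched as a thin wrapper around an ordinary retrieve-then-generate pipeline. This is a minimal illustration under stated assumptions, not the paper's implementation: `needs_retrieval`, `respond`, the 0.5 threshold, and all `toy_*` functions are hypothetical names introduced here, and the toy scorer is only a placeholder for a trained gate.

```python
from typing import Callable, List

def needs_retrieval(context: List[str],
                    score_fn: Callable[[List[str]], float],
                    threshold: float = 0.5) -> bool:
    """Return True when the gate score suggests retrieval would help."""
    return score_fn(context) >= threshold

def respond(context: List[str],
            score_fn: Callable[[List[str]], float],
            retrieve_fn: Callable[[List[str]], List[str]],
            generate_fn: Callable[[List[str], List[str]], str],
            threshold: float = 0.5) -> str:
    """Call the retriever only when the gate fires, then generate."""
    if needs_retrieval(context, score_fn, threshold):
        knowledge = retrieve_fn(context)
    else:
        knowledge = []  # skip retrieval; generate from the context alone
    return generate_fn(context, knowledge)

# --- Hypothetical stand-ins so the sketch runs end to end ---
def toy_score(context: List[str]) -> float:
    # Placeholder for a trained gate: favors retrieval for questions.
    return 0.9 if context[-1].rstrip().endswith("?") else 0.1

def toy_retrieve(context: List[str]) -> List[str]:
    return ["<retrieved snippet>"]

def toy_generate(context: List[str], knowledge: List[str]) -> str:
    return f"reply using {len(knowledge)} snippet(s)"

print(respond(["What time does the museum open?"],
              toy_score, toy_retrieve, toy_generate))
print(respond(["Thanks, that helps."],
              toy_score, toy_retrieve, toy_generate))
```

Passing the scorer, retriever, and generator in as callables keeps the gate independent of any particular model, so a learned classifier could replace `toy_score` without changing the control flow.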

Validation of RAGate

- Experimentation: Conducted on KETOD (an enriched Task-Oriented Dialogue dataset based on SGD) spanning 16 domains.

- Findings: In the early turns of a conversation, responses generated without external knowledge were more natural and diverse, which suggests that misapplied knowledge can harm the user experience.

🧠 Understanding Conversational System Evolution

Definition

Integrating LLMs into conversational systems means using pre-trained models to power response generation, dialogue flow, and intent interpretation.

Capabilities of LLMs

- Context understanding

- Coherent response generation

- Basic reasoning over input sequences

Traditional Conversational Systems

- Rule-based systems (rigid, manual)

- Template-based responses (non-generative)

- State-machine driven dialogue flows (cumbersome)

- Simple ML models (Naive Bayes, SVMs)

- Information-retrieval based (non-generative, high dependency on database)

Advantages of Traditional Systems

- Cost efficient

- Deterministic behavior (ideal for critical use-cases)

- Low-latency, especially for embedded or constrained environments

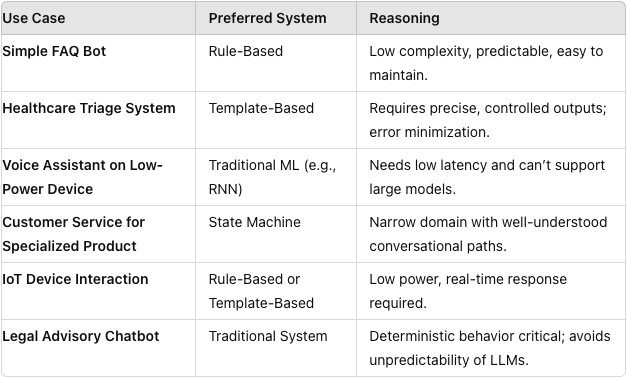

Example Use Cases

📡 RAG in Conversational Systems

Why RAG?

- Augments LLMs with real-time knowledge retrieval

- Grounds generation with contextual facts

- Improves trust, accuracy, and adaptability
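As a rough illustration of what "grounding" means in practice, retrieved passages are typically placed in the prompt ahead of the user turn. The sketch below assumes a plain-text prompt; the function name `build_grounded_prompt` and the prompt wording are hypothetical and not taken from the paper.

```python
from typing import List

def build_grounded_prompt(user_turn: str, passages: List[str]) -> str:
    """Prepend numbered retrieved passages to the user turn.

    With no passages, fall back to an ungrounded prompt.
    """
    if not passages:
        return f"User: {user_turn}\nAssistant:"
    facts = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer using only the facts below.\n"
        f"Facts:\n{facts}\n"
        f"User: {user_turn}\nAssistant:"
    )

print(build_grounded_prompt(
    "When does it open?",
    ["The museum opens at 9 am."],
))
```

Numbering the passages lets the generated answer point back to a specific fact, which is one simple way to make responses easier to verify.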

Types of RAG

- Single-pass RAG

- Iterative RAG

- Knowledge-Enhanced RAG

- Hybrid RAG

- Memory-Augmented RAG

- Cross-Attention RAG

- Modular RAG

- Task-Specific RAG

⚙️ Efficiency Enhancements & RAG Infrastructure

Dense Passage Retrieval

Encodes queries and passages as dense embeddings, so matching is based on meaning rather than on exact term overlap as in sparse approaches (e.g., TF-IDF).
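The contrast with sparse matching can be shown with a toy example. The vectors below are hand-assigned placeholders, not the output of a real encoder, and cosine similarity is used as a simple stand-in for the similarity score (Dense Passage Retrieval itself scores with the dot product of learned encoder outputs). All names are hypothetical.

```python
import math
from typing import Dict, List

def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def dense_rank(query_vec: List[float],
               doc_vecs: Dict[str, List[float]]) -> List[str]:
    """Rank document ids by similarity to the query embedding."""
    return sorted(doc_vecs,
                  key=lambda doc_id: cosine(query_vec, doc_vecs[doc_id]),
                  reverse=True)

def term_overlap(query: str, doc: str) -> int:
    """Sparse-style signal: number of shared lowercase tokens."""
    return len(set(query.lower().split()) & set(doc.lower().split()))

docs = {
    "food": "cheap places to eat nearby",
    "train": "train timetable for Monday",
}
query = "affordable restaurants"

# Toy embeddings (assumed): first axis ~ "dining", second ~ "transport".
doc_vecs = {"food": [0.8, 0.2], "train": [0.1, 0.9]}
query_vec = [0.9, 0.1]

print({doc_id: term_overlap(query, text) for doc_id, text in docs.items()})
print(dense_rank(query_vec, doc_vecs))
```

The query shares no tokens with either document, so a pure term-overlap score cannot separate them, while the embedding-based ranking still places the dining document first.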

Public Search Services

- Elasticsearch

- Google Cloud Search API

Task-Oriented Dialogue (TOD) Systems

Models trained to maximize the likelihood of target responses over multi-domain, task-oriented dialogue data.

SURGE (Subgraph RAG)

Combines contrastive learning with subgraph retrieval to improve grounding.

[1] Elvis from X | [2] Adaptive Retrieval-Augmented Generation for Conversational Systems